This blog was written by Roy Carr-Hill.

It has often been lamented that girls are more likely than boys to drop out of school (for example, because of parents’ gender preference, pregnancy or danger, among many other postulated factors), or to perform worse. To help with measuring drop-out rates, UNESCO introduced the Gender Parity Index, which measures the relative participation of boys and girls at each schooling level. When it comes to measuring girls’ performance, the focus has been on girls’ test scores or their transition rate between cycles, as in the evaluations of programmes funded through the Girls’ Education Challenge (GEC) framework.

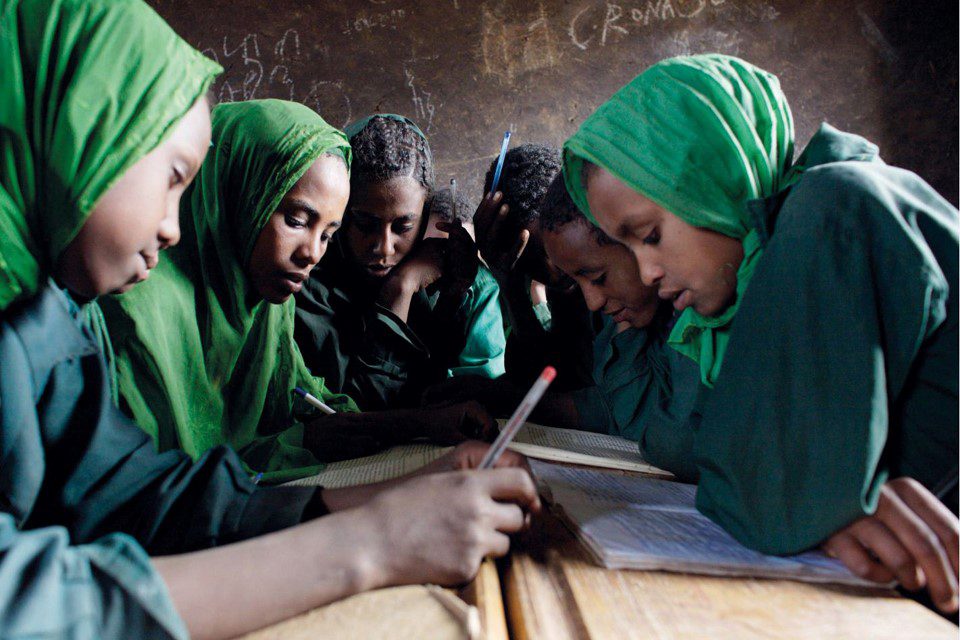

DFID is committed to getting more girls into school and improving their lives through education. Picture: Gary Calaf/Save the Children Fund.

For example, the GEC evaluation team randomly select two groups of girls from schools in marginal or vulnerable areas (one to be the intervention group and one the control group), follow them both over time and compute the differences between the groups at each follow-up. Whilst this is a plausible design, it probably does not take sufficient account of the heterogeneity of schools, not only in general but specifically in the context of multiple differences between ethnicities and the like.

The typical response to this set of potential confounders is to collect a wide range of information about the schools, their teachers and the ambience and, to the extent that the evaluation budget allows, to observe (or video) classes to see whether the pedagogical approaches differentiate between more vulnerable and less vulnerable children. Worldwide literature shows that:

- There is considerably more variation between schools in developing countries than in developed countries. Material and human resource inputs also have a stronger and more consistent positive effect in developing countries, whereas the evidence there is weak and inconclusive for the instructional factors that have received empirical support in industrialised countries (Scheerens, 1999; Scheerens, 2000; Scheerens, 2001a; Scheerens, 2001b).

- Where the relation being studied is between inputs and student performance, however, the estimated relations (Hanushek, 1997) are inconsistent and, even with sophisticated statistical adjustments, substantial residual heterogeneity remains.

The solution proposed here is, in addition to collecting basic information about the school and the teachers, to ‘leapfrog’ the problem of disentangling all the other confounders from the intervention effect by monitoring, scoring or testing a sample of boys in the same class or school. Whatever non-intervention school effects there are, there is no reason to believe that they will not be reflected equally in the performance of boys and girls. The outcome then becomes not the straightforward comparison of girls’ performance in the intervention and control groups, but the difference between girls and boys in the intervention group compared with the difference between girls and boys in the control group. This should provide more consistent estimates, because school-level confounders that affect boys and girls alike cancel out of the comparison. It will also substantially reduce the need for complex statistical manipulation, and therefore make the results more ‘readable’ for policymakers and other stakeholders.
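To make the proposed outcome concrete, here is a minimal sketch of the calculation in Python. It is an illustration under stated assumptions rather than the GEC evaluation method: the function names `gender_gap` and `gap_difference` are hypothetical, the scores are invented, and a real evaluation would also need sampling weights and standard errors.

```python
from statistics import mean


def gender_gap(girls_scores, boys_scores):
    """Mean girls' score minus mean boys' score within one group of schools."""
    return mean(girls_scores) - mean(boys_scores)


def gap_difference(intervention, control):
    """Girls-minus-boys gap in intervention schools minus the same gap in control schools."""
    return (gender_gap(intervention["girls"], intervention["boys"])
            - gender_gap(control["girls"], control["boys"]))


# Hypothetical test scores, invented purely for illustration
intervention = {"girls": [52, 58, 61], "boys": [55, 57, 62]}
control = {"girls": [45, 50, 49], "boys": [54, 56, 58]}

estimate = gap_difference(intervention, control)
print(estimate)
```

With these invented numbers, girls trail boys by 1 point in the intervention schools and by 8 points in the control schools, so the estimate is 7 points. Any school effect that raises or lowers boys' and girls' scores by the same amount drops out of each gap before the two groups are compared.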