This blog was written by Angela W Little, Professor Emerita, UCL Institute of Education, and UKFIET Trustee.

This blog extends an earlier discussion of the report by the Global Education Evidence Advisory Panel (GEEAP): Cost-effective Approaches to Improve Global Learning Levels, in which a number of Randomised Controlled Trial (RCT) experiments in education are synthesised. In an earlier blog, I paid particular attention to the findings on Teaching at the Right Level – or TaRL – which essentially means ability grouping: allocating children to groups for learning based on their level of achievement and teaching them from this level[i]. In this blog I do not wish to suggest that we should ‘teach at the wrong level’ – who would?!

Rather, I reflect on the synthesis of the individual studies, many of which are of very high quality. I interrogate the question ‘What works?’ and suggest a series of refinements to this question if answers are to be of use to policymakers and practitioners. In so doing I raise questions about:

- picking flowers and leaves

- inclusion and equity

- replication

- the reporting of negative and null effects, and

- scalability, costs and sustainability

- Picking flowers and leaves

The words of Michael Sadler, a comparative educationalist, writing more than a century ago, are as true now as they were then:

“We cannot wander at pleasure among the educational systems of the world, like a child strolling through a garden and pick off a flower from one bush and some leaves from another, and then expect that if we stick what we have gathered into the soil at home, we shall have a living plant.”

In their attempt to identify ‘smart buys’ and ‘poor buys’ this is what the authors of the report Cost-effective Approaches to Improve Global Learning Levels have done. They offer a ‘great buy’ flower from here and a ‘bad buy’ leaf from there. The system characteristics – the soil if you like – that nurture the flower or leaf are almost totally ignored.

This question of the soil, context and system is of importance to national policymakers and indeed to all of us. What national policymakers and their officers want to know is: What is the most cost-effective approach to improving learning in my country or area, taking account of system characteristics that are fixed and those that might be reformed? It goes without saying that we can all benefit from an awareness of the diversity of educational arrangements around the world – but what national policymakers need equally, if not more, is a bank of well-conducted studies from their own system in line with questions about learning which they have defined. Only then is attention likely to be paid to the design, methods and findings of studies from elsewhere that address similar or related questions.

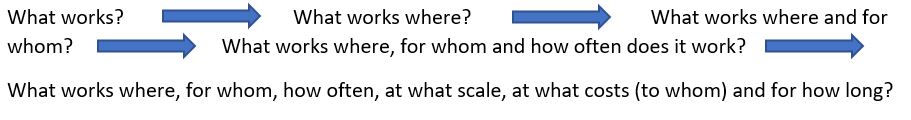

An initial refinement of ‘What works?’ is: ‘What works where?’

- Inclusion and equity effects

The authors of many studies and/or those who synthesise studies report the effects of an intervention across a sample and not on sub-groups within a sample. So, for example, TaRL may work well for the high achievers and generate an overall positive effect, but it may work less well for low achievers and widen learning gaps. Indeed, there are several studies in the more general education literature that show that ability grouping leads to a widening rather than a narrowing of the learning gap between high and low achievers.
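The arithmetic behind this point can be sketched in a few lines of Python. The sub-group shares and effect sizes below are entirely hypothetical, chosen only to illustrate the logic; they are not drawn from the GEEAP report or from any TaRL study, and `pooled_effect` is a helper name invented for this sketch.

```python
# Illustrative only: the shares and effect sizes are hypothetical,
# not taken from the GEEAP report or any TaRL study.

def pooled_effect(subgroups):
    """Return the sample-weighted average effect across sub-groups.

    `subgroups` maps a label to (share_of_sample, effect_in_sd).
    """
    total_share = sum(share for share, _ in subgroups.values())
    weighted = sum(share * effect for share, effect in subgroups.values())
    return weighted / total_share

subgroups = {
    "high achievers": (0.5, 0.30),  # hypothetical: gain of 0.30 SD
    "low achievers": (0.5, 0.00),   # hypothetical: no gain
}

overall = pooled_effect(subgroups)
# Difference between the two sub-group effects: how much the gap moves.
gap_change = subgroups["high achievers"][1] - subgroups["low achievers"][1]

print(f"Pooled effect: {overall:.2f} SD")
print(f"Change in high-low gap: {gap_change:+.2f} SD")
```

Under these assumed numbers the pooled effect is positive even though low achievers gain nothing and the gap between the two groups widens. A report that gives only the pooled figure cannot distinguish this case from one in which both groups gain equally.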

So let us refine ‘What works where?’ a little further: ‘What works, where and for whom?’

- Replication

Replication is a fundamental requisite of a body of knowledge that claims generalisability. Despite the clustering of TaRL interventions which bear surface similarities, each reported intervention differs from the next. Some interventions employed NGO volunteers, some mainstream teachers, some lengthened the school day, some offered learning camps during school holidays. None of the TaRL studies, or indeed of the other studies cited in the synthesis report, is a replication of another.

This absence of replication would not be an acceptable approach to those who employ RCTs in health research, the field of knowledge that has inspired the use of RCTs in education. In health, many types of trial on large numbers of people in diverse countries are typically employed before a new drug or vaccine is approved for use.

- The reporting of negative and null effects

Aid agencies are understandably keen to report positive findings of intervention programmes, especially those they may have sponsored. But a careful reading of the research that lies behind such claims often exposes as many negative and null effects as positives.

In the case of the GEEAP synthesis report, the negatives are underplayed. The report notes that half of all the interventions that have cost data (and many did not) produced no significant effects. These are then omitted from further mention. Even when the original research has reported negative or null effects, these are not taken into account. A case in point is the way the authors of the synthesis report employ findings from the TaRL studies in India. The original research paper reports 19 treatment effect estimates, of which 10 are positive, while 9 show no positive effect. These ‘no effect’ estimates appear to disappear when the paper is used in the synthesis report. Why is this?

This is an issue for education researchers generally and not just the authors of the GEEAP report. The use and explanation of null and negative findings is as important as the positive. Positive findings increase the probability that a hypothesis is true; null and negative findings challenge the researchers’ assumptions and can lead to improved hypotheses and theory. We need to report, embrace and reflect on the negative and the null rather more in the future.

So let us refine our question even further: ‘What works, where, for whom and how often does it work?’

- Scalability, costs and sustainability

Even if we find that an intervention works well for most children, most of the time, we are still left with issues of scalability, costs and sustainability.

To what extent might an intervention be scaled up over space and sustained over time within a system?[ii] I take another piece of TaRL research cited in the synthesis report: a study of Grade 1 classes in 121 schools in Western Kenya in 2005. These schools received funds to hire an extra Grade 1 teacher, allowing the size of Grade 1 classes to be halved. In the randomly selected intervention classes, initial test scores were used to assign students to one of two groups, one where students had achieved higher than average initial scores and the other where students had achieved lower than average initial scores. The assumption was that the teachers would teach ‘at the right level’ for the students – one higher and one lower. In the control classes, students were assigned randomly to one of the two sections rather than on ability. After 18 months, test scores were 0.14 standard deviation points higher in the treatment classes than in the control classes. This was a positive result from a well-conducted and well-reported individual research study.

But, before jumping to the conclusion that this is how all Grade 1 students should be taught and that this type of programme be implemented across all grades in a school, across all schools in Kenya – or, even more ambitiously, across all grades in all schools across all low- and middle-income countries – there are issues of scalability, cost and sustainability. Critical to this intervention was the allocation of an extra Grade 1 teacher to each school and the halving of class sizes. If this intervention were introduced system-wide, this would mean doubling the teacher salary budget, which effectively means doubling the size of the entire education budget, and paying salaries before paying for any of the other ‘smart buys’. In turn, this raises the questions of who pays the costs and whether these can be sustained over time.
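The budget reasoning above can be made explicit with a small sketch. It assumes, purely for illustration, that the education budget splits into teacher salaries and everything else, and that only the salary component grows when teacher numbers are multiplied. The salary shares used are hypothetical and do not describe Kenya or any other system; `budget_multiplier` is a name invented for this sketch.

```python
# Illustrative only: salary shares are hypothetical, not figures for
# Kenya or any other education system.

def budget_multiplier(salary_share, teacher_multiplier):
    """Factor by which the total budget grows when teacher numbers are
    multiplied by `teacher_multiplier`, assuming only the salary
    component of the budget changes.

    `salary_share` is the fraction of the current budget spent on
    teacher salaries (between 0 and 1).
    """
    if not 0.0 <= salary_share <= 1.0:
        raise ValueError("salary_share must be between 0 and 1")
    return 1.0 + salary_share * (teacher_multiplier - 1.0)

# Doubling teacher numbers under three assumed salary shares.
for share in (0.6, 0.8, 0.9):
    factor = budget_multiplier(share, 2.0)
    print(f"Salary share {share:.0%}: total budget x {factor:.2f}")
```

The closer teacher salaries come to the whole of the budget, the closer doubling the teaching force comes to doubling total spending, before anything is spent on other interventions.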

So the question ‘What works?’ needs to be refined further still to address: ‘At what scale, at what costs (to whom) and for how long?’

In summary then, let us return to the question ‘What works?’ and suggest a series of refinements of value for policymakers and practitioners.

What works where, for whom, how often, at what scale, at what costs (to whom) and for how long?

And, for the research community, we might add – AND WHY? – to each of the above.

[i] Only studies adopting an RCT design were included in the synthesis. Other relevant TaRL research, especially from the MGML and ABL interventions in India, was not addressed. See for example, Education Initiatives (2015) Evaluation of Activity-Based Learning as a Means of Child-Friendly Education, Final Report to UNICEF, Delhi and RIVER.

[ii] For a broader discussion on the external validity of RCTs and of their value within development thinking more generally, see Rolleston, C. (2016) Systematic Reviews: can they tell us what works in education? and Pritchett, L. (2019) Randomising Development; method or madness?